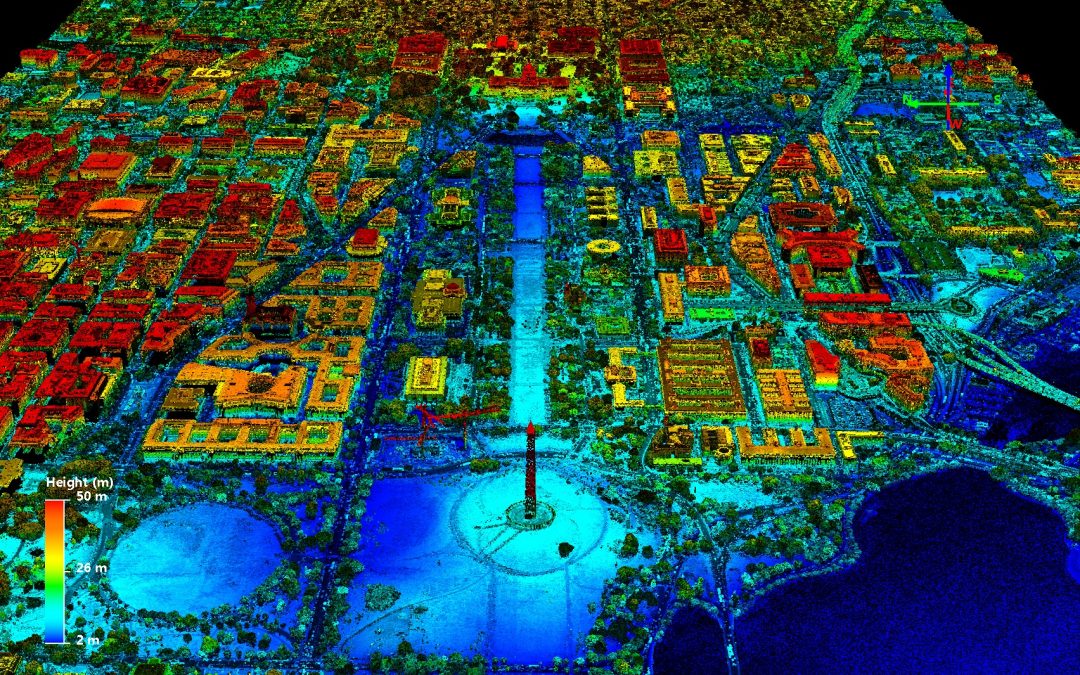

LiDAR point-cloud image of Washington, DC (source: USGS.gov)

Blog Editor’s Note:

Last summer we saw a paper from researchers who spoofed all the GNSS constellations at once, at the very modest price of $400. If you are on a fixed income and $400 seems like a lot, think about the tens of billions of dollars invested to produce GNSS signals.

The next month we saw a paper from researchers who decided that spoofing the signal alone might not be enough to mislead a driver. So they also figured out how to send a false map that looked like where the driver was but that, along with the spoofed GNSS signals, would help misdirect the target vehicle. Just perfect for kidnapping, stealing cargo, or luring a driver into some other dangerous situation.

This summer we saw a paper from Regulus that reported on their ability to cause a Tesla in auto-drive mode to suddenly brake, accelerate, and exit the highway early and at the wrong point (we understand their co-worker in the car was scared silly). Thanks to the car’s lane-keeping cameras, they were not able to direct the car off the road.

In addition to cameras, many automated driving systems use LiDAR (a laser-based, radar-like sensor) to measure the distance to other objects and help keep the car on the road. Sensors such as cameras and LiDAR, which do not require a network connection or data from outside the vehicle, are critical for lane keeping, collision avoidance, and other fail-safe functions. Below is a report on a paper showing that LiDAR can also be fooled.

It turns out that no PNT system or sensor is foolproof. We have even seen papers on how to spoof inertial sensors.

This might be a good time to reiterate our call for a more complete architecture of positioning, navigation, and timing sources with greatly differing phenomenologies as the best route to resilience, reliability, and safety. See our graphic here.

Yes, users will have to get the equipment and then access the architecture’s various systems, but that’s a lot better than not having them available at all.

Medium.com

Researchers Fool LiDAR with 3D-Printed Adversarial Objects

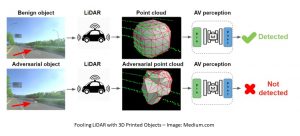

Despite the very high cost of LiDAR, most players in the self-driving technology market regard this advanced spatial surveying technology as an indispensable sensor for autonomous vehicles. Unlike cameras, which can be negatively affected by bad weather or low-light conditions, LiDAR produces accurate, computer-friendly point cloud data across a wide range of conditions and can complement other sensors to improve safety for self-driving vehicles.

However, like most other tech, LiDAR is also vulnerable to hackers. To illustrate this, researchers from Baidu Research, the University of Michigan, and the University of Illinois at Urbana-Champaign have published a method for generating adversarial objects that can befuddle LiDAR-based object detection and compromise the safety of vehicles using the tech.

The researchers first employed an evolution-based black-box attack algorithm to demonstrate the vulnerabilities of LiDAR sensors, then applied their new gradient-based approach, LiDAR-Adv, to explore the effect of more powerful adversarial samples. They tested the efficacy of LiDAR-Adv on Baidu’s home-grown Apollo autonomous driving platform and in the real world. What’s scary is that LiDAR-Adv fooled LiDAR not only in simulated environments: 3D-printed adversarial samples also went undetected by LiDAR in the real world.
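The article does not describe the evolution-based black-box algorithm in detail. As a rough illustration only, here is a minimal evolution-strategy sketch in Python. It assumes a hypothetical detector, `toy_detector_confidence`, that the attacker can query but not differentiate, and per-vertex offsets limited to an assumed budget `eps`; the population size, mutation scale `sigma`, and toy object are all made up for the example. It is not the researchers' algorithm.

```python
import random
from typing import Callable, List, Tuple

Point = Tuple[float, float, float]
Offsets = List[Point]


def toy_detector_confidence(points: List[Point]) -> float:
    """Hypothetical black-box detector: the attacker can only read its output.
    Here, confidence is simply the fraction of points higher than 0.5 m."""
    return sum(1 for _, _, z in points if z > 0.5) / len(points)


def apply_offsets(vertices: List[Point], offsets: Offsets) -> List[Point]:
    """Move each vertex by its (dx, dy, dz) offset."""
    return [(x + dx, y + dy, z + dz)
            for (x, y, z), (dx, dy, dz) in zip(vertices, offsets)]


def clip(value: float, eps: float) -> float:
    """Keep one coordinate offset inside the assumed budget [-eps, eps]."""
    return max(-eps, min(eps, value))


def evolve_attack(vertices: List[Point],
                  score_fn: Callable[[List[Point]], float],
                  eps: float = 0.3, pop_size: int = 20, generations: int = 50,
                  sigma: float = 0.1, seed: int = 0) -> Tuple[Offsets, float]:
    """Search for bounded vertex offsets that minimise score_fn,
    using only queries of score_fn (no gradients)."""
    rng = random.Random(seed)
    n = len(vertices)

    def fitness(offsets: Offsets) -> float:
        return score_fn(apply_offsets(vertices, offsets))

    def mutate(parent: Offsets) -> Offsets:
        # Gaussian mutation of every coordinate, clipped back into the budget.
        return [tuple(clip(d + rng.gauss(0.0, sigma), eps) for d in off)
                for off in parent]

    # Start from the unperturbed object plus random mutations of it.
    zero: Offsets = [(0.0, 0.0, 0.0)] * n
    population = [zero] + [mutate(zero) for _ in range(pop_size - 1)]

    for _ in range(generations):
        population.sort(key=fitness)                 # lowest confidence first
        elite = population[: max(1, pop_size // 4)]  # survivors (elitism)
        children = [mutate(rng.choice(elite))
                    for _ in range(pop_size - len(elite))]
        population = elite + children

    best = min(population, key=fitness)
    return best, fitness(best)


if __name__ == "__main__":
    # A toy "object": ten vertices stacked between 0.5 m and 0.95 m.
    verts = [(0.0, 0.0, 0.5 + 0.05 * i) for i in range(10)]
    offsets, score = evolve_attack(verts, toy_detector_confidence)
    print("before:", toy_detector_confidence(verts), "after:", score)
```

Because the unperturbed object starts in the population and the best candidates always survive, the returned confidence can never be worse than the original; the clipping step is one simple way to respect a limited perturbation space.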

LiDAR-based detection systems consist of multiple non-differentiable steps rather than a single end-to-end network. This significantly limits the effectiveness of gradient-based end-to-end attacks and has so far helped protect LiDAR from adversarial attacks. To create effective adversarial samples, the researchers faced three challenges:

- A LiDAR-based detection system uses a solid LiDAR device to map a 3D shape onto a point cloud, which is then fed into a machine learning detection system. It is therefore unclear how perturbing an object's shape affects the scanned point cloud.

- Because gradient-based optimizers cannot work through the non-differentiable preprocessing of the LiDAR point cloud, a new optimizer is needed.

- The perturbation space is subject to various restrictions.

To meet these challenges, the researchers first simulated a differentiable LiDAR renderer that links perturbations of a 3D target to the resulting LiDAR scan (point cloud). They then performed 3D feature aggregation using a differentiable proxy function. Finally, they designed several losses to ensure that the generated 3D adversarial samples were smooth. The results show that, even against 3D sensing and production-level multi-stage detectors, the researchers were able to mislead an autonomous driving system.
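The article only names the "differentiable proxy function" and the smoothness losses. One way to picture the idea: a hard per-cell point count, a typical hand-crafted LiDAR feature, jumps when a point crosses a cell boundary, so it gives an optimizer no useful gradient, whereas a bilinear "soft count" spreads each point over neighboring cells and changes continuously. The sketch below also adds a Laplacian smoothness penalty, a common choice for keeping mesh perturbations smooth. Both functions, their names, and the grid layout are illustrative assumptions in plain Python, not the paper's exact formulation; a real attack would compute such terms in an autodiff framework.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]
Grid = List[List[float]]


def hard_count(points: Sequence[Point], nx: int, ny: int, cell: float) -> Grid:
    """Non-differentiable feature: number of points falling in each grid cell."""
    grid = [[0.0] * ny for _ in range(nx)]
    for x, y, _ in points:
        i, j = int(x // cell), int(y // cell)
        if 0 <= i < nx and 0 <= j < ny:
            grid[i][j] += 1.0
    return grid


def tent(d: float, cell: float) -> float:
    """Triangular kernel: 1 at a cell centre, 0 one cell width away."""
    return max(0.0, 1.0 - abs(d) / cell)


def soft_count(points: Sequence[Point], nx: int, ny: int, cell: float) -> Grid:
    """Hypothetical proxy feature: each point is split bilinearly among the
    nearest cell centres, so the grid changes continuously as points move
    (piecewise linear, hence differentiable almost everywhere)."""
    grid = [[0.0] * ny for _ in range(nx)]
    for x, y, _ in points:
        for i in range(nx):
            wx = tent(x - (i + 0.5) * cell, cell)
            if wx == 0.0:
                continue
            for j in range(ny):
                grid[i][j] += wx * tent(y - (j + 0.5) * cell, cell)
    return grid


def laplacian_loss(offsets: Sequence[Point],
                   neighbors: Dict[int, List[int]]) -> float:
    """Smoothness penalty: squared distance between each vertex offset and the
    mean offset of its mesh neighbours. Zero for a uniform shift, large for spikes."""
    loss = 0.0
    for i, nbrs in neighbors.items():
        for k in range(3):
            mean = sum(offsets[j][k] for j in nbrs) / len(nbrs)
            loss += (offsets[i][k] - mean) ** 2
    return loss


if __name__ == "__main__":
    # Nudging a point across the x = 1.0 cell boundary flips the hard count
    # of cell (0, 0) from 1 to 0 but barely changes its soft count.
    before, after = [(0.99, 0.5, 0.0)], [(1.01, 0.5, 0.0)]
    print(hard_count(before, 3, 3, 1.0)[0][0], hard_count(after, 3, 3, 1.0)[0][0])
    print(soft_count(before, 3, 3, 1.0)[0][0], soft_count(after, 3, 3, 1.0)[0][0])
```

Adding a weighted `laplacian_loss` to the attack objective penalizes isolated spikes in the perturbed shape, which is one way to favor objects that remain smooth enough to 3D-print.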

To better demonstrate the flexibility of the LiDAR-Adv attack method, the researchers used two additional evaluation scenarios:

- Hidden Target: synthesize an adversarial sample that will not be detected;

- Change Label: synthesize an adversarial sample that is recognized as a specific target.
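The two scenarios amount to two different optimization targets. The sketch below writes each as a Python function that an attack would minimize. It is a hedged illustration, not the paper's loss functions: the per-cell `objectness` scores, the `class_probs` dictionary, and the 0.5 detection threshold are all assumptions made for the example.

```python
import math
from typing import Dict, Sequence


def hidden_target_loss(objectness: Sequence[float]) -> float:
    """'Hidden Target' objective (hypothetical form): the highest objectness
    score among the detector cells that cover the adversarial object.
    Minimising it pushes every cell toward "no object here"."""
    return max(objectness)


def change_label_loss(class_probs: Dict[str, float], target_label: str) -> float:
    """'Change Label' objective (hypothetical form): cross-entropy toward the
    label the attacker wants the object to receive. The floor avoids log(0)."""
    return -math.log(max(class_probs[target_label], 1e-12))


def hidden_target_succeeded(objectness: Sequence[float],
                            threshold: float = 0.5) -> bool:
    """Hidden Target succeeds when no cell reaches the assumed detection threshold."""
    return hidden_target_loss(objectness) < threshold


if __name__ == "__main__":
    # Before an attack, one cell confidently "sees" the object.
    print(hidden_target_loss([0.1, 0.92, 0.4]))  # 0.92
    print(hidden_target_succeeded([0.1, 0.92, 0.4]))  # False
    # Raising the probability of the attacker's label lowers its loss.
    print(change_label_loss({"car": 0.7, "pedestrian": 0.3}, "pedestrian"))
    print(change_label_loss({"car": 0.1, "pedestrian": 0.9}, "pedestrian"))
```

The same optimizer can serve both scenarios; only the objective it is asked to minimize changes.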

In the experiments, the researchers first used an evolution-based black-box algorithm to create undetectable targets, exposing vulnerabilities in LiDAR detection systems. They then demonstrated qualitative and quantitative results for the LiDAR-Adv method in a white-box setting. The results also showed that LiDAR-Adv can achieve other malicious and potentially dangerous goals, such as “Change Label”.

The paper “Adversarial Objects Against LiDAR-Based Autonomous Driving Systems” is on arXiv. Further information on the experiments can be found here.

Author: Reina Qi Wan | Editor: Michael Sarazen